I’ve been through a couple of similar technology revolutions, and, to be honest, I got both of them wrong. The first was the internet, which I greatly underestimated. The second is BIM (Building Information Modelling), which has made a lot of progress and is increasingly widely used, yet hasn't achieved the industry transformation we were all predicting.

So, will AI be any different?

I don’t know yet, but at YourQS we aren’t sitting on the sidelines. We have a couple of AI use cases in the R&D pipeline and will see what we can achieve.

What muddies the waters is that there is a lot of “jumping on the AI bandwagon”, with software systems claiming AI capability for technologies that have existed for years. On that basis we could call our system AI, as it creates detailed and accurate costings at the push of a button, but I know that underneath it is all structured logic, the same as most of these other systems, so it isn’t AI.

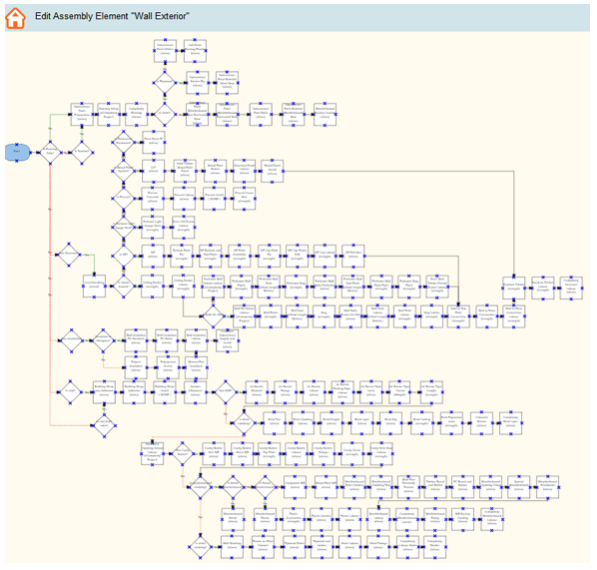

Structured logic means lots of statements like “if X = this, then do this”. These can be very, very complex and very smart, and may even look like AI, but they are not AI. For example, above is our logic flow for costing a wall. It is very smart and can handle millions of construction permutations, but it is not AI.
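To make “structured logic” concrete, here is a minimal Python sketch of a rule-based wall costing. It is not YourQS's actual logic: the `Wall` fields, the `cost_wall` function and all the dollar rates are hypothetical, invented for illustration. The point is that a person writes every rule and every rate, and the program never changes them.

```python
from dataclasses import dataclass

# Hypothetical rates in NZ$ per square metre of wall, invented for illustration.
FRAMING_RATE = {"timber": 45.0, "steel": 70.0}
LINING_RATE = 30.0      # plasterboard, per side
CLADDING_RATE = 120.0   # weatherboard, exterior walls only


@dataclass
class Wall:
    length_m: float
    height_m: float
    framing: str    # "timber" or "steel"
    exterior: bool


def cost_wall(wall: Wall) -> float:
    """Cost a wall with fixed, hand-written rules; nothing here is learned."""
    area = wall.length_m * wall.height_m
    cost = area * FRAMING_RATE[wall.framing]
    # If the wall is exterior, then line one side and clad the other;
    # otherwise line both sides.
    if wall.exterior:
        cost += area * LINING_RATE + area * CLADDING_RATE
    else:
        cost += area * LINING_RATE * 2
    return round(cost, 2)
```

With these made-up rates, `cost_wall(Wall(4.0, 2.4, "timber", exterior=True))` returns 1872.0. A real system layers many more rules like these to cover more permutations, but each one is still written by a person.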

One common definition of AI is “making it possible for machines to learn from experience, adjust to new inputs and perform human-like tasks”. The critical words are “learn from experience”. I don’t believe that my configuring our system to do these tasks counts as the machine “learning”, so it’s not AI.
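For contrast, here is a toy Python sketch of what “learn from experience” means. Instead of a person typing in the rate, the program derives a cost per square metre from past jobs using a least-squares fit of cost = rate × area. The `learn_rate` function and the job data are hypothetical, and this is vastly simpler than how ChatGPT learns (it trains a very large neural network on text), but the broad idea of deriving the rule from examples is the same.

```python
def learn_rate(jobs: list[tuple[float, float]]) -> float:
    """Learn a cost-per-square-metre rate from past (area, cost) pairs.

    Uses a least-squares fit of cost = rate * area (a line through the origin),
    so the rate comes from the data rather than being typed in by a person.
    """
    num = sum(area * cost for area, cost in jobs)
    den = sum(area * area for area, _ in jobs)
    return num / den


# Made-up past jobs as (wall area in m², final cost in $).
past_jobs = [(10.0, 1950.0), (20.0, 4050.0), (15.0, 3000.0)]

rate = learn_rate(past_jobs)   # roughly 200.7 $/m²
estimate = rate * 12.0         # cost estimate for a new 12 m² job
```

Adding more past jobs changes the learned rate without anyone editing the code, which is the sense of “learning from experience” in the definition above.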

ChatGPT, for example, can learn by itself and create new information based on what it has learned. It recognises patterns in information on a scale beyond what a human can achieve, and so creates its own “if X = this, then do this” instructions. There are two big limitations with this, though, which probably apply to all AI:

- a) It is only as good as the information it is learning from; it needs a lot of data, and rubbish in = rubbish out. AI drift or hallucination, where small mistakes move the AI off track, is also a well-known phenomenon.

- b) It can’t come up with something it has never seen before. It might combine patterns it knows in a new way, but it won’t have the flash of inspiration that humans can have.
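Point (a) can be shown with the same kind of toy fit. Again, the `learn_rate` function and the data are hypothetical and far simpler than how large AI models are trained: one mistyped cost among three otherwise consistent jobs is enough to make the learned rate about six times too high.

```python
def learn_rate(jobs: list[tuple[float, float]]) -> float:
    """Least-squares cost-per-square-metre rate (cost = rate * area)."""
    num = sum(area * cost for area, cost in jobs)
    den = sum(area * area for area, _ in jobs)
    return num / den


# Three made-up past jobs as (area in m², cost in $).
clean_jobs = [(10.0, 2000.0), (20.0, 4000.0), (15.0, 3000.0)]
# The same jobs, but one cost was mistyped with an extra zero.
dirty_jobs = [(10.0, 2000.0), (20.0, 40000.0), (15.0, 3000.0)]

clean_rate = learn_rate(clean_jobs)   # 200.0
dirty_rate = learn_rate(dirty_jobs)   # about 1193.1
```

Nothing in the fit can tell that the 40,000 is a typo; it simply learns from what it is given.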

Much of the current focus of AI is on documentation, with tools like ChatGPT, and it can do stunning things. I had a bike crash a little while back and, as a result, had a session at a concussion clinic. The doctor recorded it, and their AI tool created a set of notes from it. The result was impressive: it picked up subjects that we had talked about at different times during the meeting and collated them into a coherent summary. There is no question for me that AI is a huge aid to any sort of writing task.

One question that came up at a recent Callaghan AI presentation I attended was “Can AI cost my design?” If it could, this would affect my business, so I have paid a lot of attention to this space. I have seen AI tools that you can teach the symbol for, say, a light switch, and they will then go through a drawing and find all the light switches. That is useful, but you still need to tell the tool what that light switch is and what it costs. This is hard to automate because its specification could be a note next to the symbol, a note somewhere else on the page, a note on a page at the front of the drawing set, in a separate specification document, or not there at all because “everyone knows you use this type of switch on this sort of build”. We’ve explored this, and training an AI to handle this much variability is not practical (yet, anyway).

We trialled an AI tool from France that could take 2D drawings and convert them into 3D walls and rooms. It looked promising, as it would save us modelling these over the drawings. What we found, though, was that the time it took to fix the issues, such as splitting walls that were partly interior and partly exterior, or correcting windows and doors because it couldn’t know their heights, was about the same as modelling it ourselves. It did have a smart feature that created rooms from the walls, but that really isn’t AI; ArchiCAD has had this for decades.

A few weeks back, the launch of the Chinese-owned Deep Seek AI caused a stir, with leading US AI companies losing billions of dollars of value overnight. Does this change things for us? The market reacted the way it did because Deep Seek were able to use older-generation computer chips to train their AI, making their offering cheaper and calling into question the need for the newest chips from Nvidia and the like. I read that Deep Seek were able to reduce the cost of training a large language AI model to “only US$5.5 million”, saving about $1.5m. That gives you an idea of the scale of the data and computing requirements of AI, and shows why it is not going to be suitable for every application.

Deep Seek and ChatGPT are what are called Large Language Models (LLMs), which means they can comprehend and generate natural-language text. As users of these technologies, we benefit from the training investment made by others to help with our text-based challenges. This does mean that our results depend on the training done by others, which will include their cultural norms, opinions, biases, censorship, mistakes, and so on.

By submitting your content to AI engines, you are exposing it to unknown other parties, so be careful how you use them. There are also questions about IP ownership: if your answer is based on copyrighted material that was used to train the AI, who owns the rights? There is speculation that Deep Seek used some ChatGPT content in its training, possibly in breach of ChatGPT’s terms of use. Could ChatGPT be doing the same with your content? It is nearly impossible for us to know.

Does that mean AI is a waste of time? Absolutely not. There are, and will be, plenty of situations where being able to identify optimal patterns in apparently disparate information will create intelligent insights, which may even be very creative. For me, the jury is still out on whether chatbots help or hinder the support process, but we are increasingly seeing them provide a first line of support. Summarising large documents or meetings, creating captions or summaries for videos, identifying things in images, and the like are becoming common uses. People are also using AI for creative writing and generating images, but this has given rise to a new term, “AI slop”, for text or pictures that sort of work, but not quite.

That is why the best applications of AI still need some form of human oversight, with AI acting as a productivity aid rather than an originator. I’m sure that over time the amount of oversight needed will reduce to the point where AI may well get close to the creative potential many are imagining, but that is a long way off yet.

So, is AI going to take over everything as we keep hearing? No.

Will AI have a big impact? Yes.

Will your job go? Maybe.

Will it change? Definitely.

Should you believe me? Well, my strike rate on these big technology shifts is 100% wrong so far, so maybe, maybe not.

No AI was harmed in the writing of this article (as I wrote it myself).

If you are interested in exploring how to start with AI, here is an article from a friend of mine in the UK:

Getting Started with AI: Key Fundamentals for Construction Professionals | CIOB

About Nick Clements

Nick won the inaugural NZIOB 2024 Digital Technology award for his work on 3D estimating in residential construction. He is a Member of the NZIQS and is vice chair of the Auckland committee.

His business, YourQS, specialises in providing cost estimating services to residential builders, architects, and homeowners for both new build and renovation projects. It has completed over 3,200 projects since launching in 2019, working for around 300 builders nationwide.

Nick is the host of the Beyond the Guesstimate podcast, where he talks with interesting people about ideas for how we can improve as an industry and as businesses within it.
